Just Act Natural

18 Jan 2022

It's in the name: the goal of our field of Natural Language Processing (NLP) is to process the natural language we humans use in our day-to-day lives. Internally, machines use models of language in order to process its meaning. These models are currently driven mainly by statistical relationships occurring in enormous amounts of training data. We have come far using these methods, but if you are part of a "niche" language community (think languages with fewer speakers, dialects, or sociolects that depend on who you communicate with), you will definitely have come across moments when these models break down.

NLP researchers are working hard towards more robustness and better coverage, but the data-hungry models used today will only get us so far. This year's Conference on Empirical Methods in Natural Language Processing (EMNLP) was a great opportunity to share new insights and featured some very interesting work on broadening the scope of current methods to be more inclusive. The key trend here is that NLP benefits from the inclusion of increased language variation at every level.

Sotaing ≠ Progress

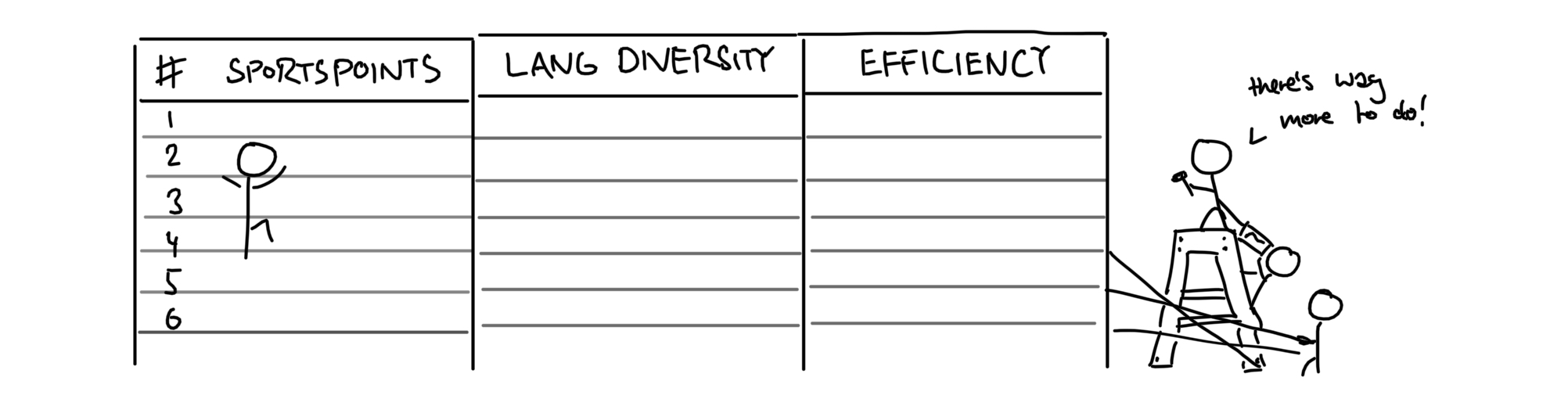

State-of-the-art results (SoTA) are well liked at top-tier conferences such as EMNLP as they provide an empirical estimate of model performance on some dataset as measured by an agreed-upon metric. Benchmark tasks provide collections of datasets on which to compute and compare these scores on leaderboards. Humans are really into these sorts of competitions and push for increasingly better numbers.

This process of sotaing, as coined in Noah Smith's keynote in the Workshop on Negative Results, is helpful in an engineering context, but does not necessarily reflect interesting research. Setting aside the fact that sotaing most often involves more data and computing power, which only well-funded institutions can afford, the minute modelling differences which contribute similarly small differences in the final scores are often exercises in engineering rather than interesting research. The most interesting research is typically not about sotaing, but rather about asking and maybe even answering questions with the aim of broadening our knowledge about language, hopefully in a way which can help improve society.

Nonetheless, NLP is a very applied field and as such most datasets represent tasks which are motivated by some practical need in language use. This goes for high-resource languages such as English as well as for the many low-resource languages which make up the majority of the 7000+ languages in use today.

Steven Bird, who gave the third keynote at EMNLP, is a strong proponent of low-resource NLP. During the talk, however, he also pointed out an issue with how this kind of research is currently conducted: a low-resource niche is often seen as having been successfully covered as soon as we solve any particular task for it, for example translating the niche language to English. While this may be an interesting academic exercise, it typically occurs completely detached from the language community in question. Do the translations generated by a model actually reflect the cultural meaning and original intent? Does a language community lacking a formalized writing system even need a model which transcribes their spoken language into some arbitrary textual representation?

Working closely with Aboriginal communities such as the speakers of the Kunwok language, Steven has been investigating these facets of truly natural language processing. He argues that these "low-resource languages" are actually extremely rich in resources if NLP researchers just listen to native-speaking language experts, collaborate with them and develop methods and models which their communities actually need. An example which stuck with me was that oral story-telling traditions do not need exact transcriptions, but that people in these communities are much more interested in putting the core ideas of their stories onto paper. Currently, the research effort dedicated to these two tasks is heavily skewed in a direction which does not directly benefit the actual language community.

While the NLP community still has a long way to go to reach these goals, I would nonetheless like to highlight four papers from EMNLP which aim at increasing the breadth of NLP to more niche language variations. They each tackle a different layer of NLP, beginning with characters and ending with datasets, and show how each level benefits from more diversity and not just more data.

The Keynotes

Smith, N., 2021. What Makes a Result Negative. The Second Workshop on Insights from Negative Results in NLP.

Bird, S., 2021. LT4All!? Rethinking the Agenda. The 2021 Conference on Empirical Methods in Natural Language Processing (EMNLP).

The Character Level

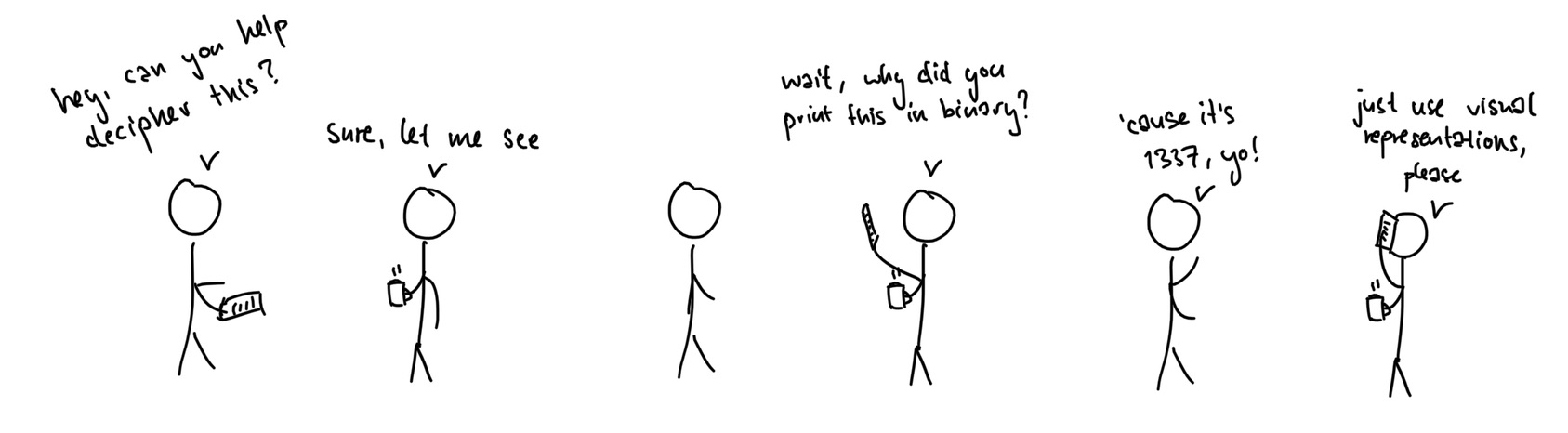

ln natural, written Iangauge, we often encounter typos or other misspeIIings which humans simpIy read over. The previous sentence for example misspells "language" and replaces all lowercase "L" with uppercase "I". While this is less of an issue for humans, machines read language in a fundamentally different way and struggle with any character sequence that is non-standard. For a computer, each character does not represent a shape, but rather a unique piece of information, typically denoted by a number which in turn is stored as a byte of 0s and 1s. For humans the difference between "l" and "I" may be minuscule, but for a machine, these are "01101100" and "01001001": two numbers separated by 35 entries, representing completely distinct pieces of information.
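
To make those two bytes concrete, here is a minimal Python sketch that looks up the codes for "l" and "I". The helper name `char_code` is my own; `ord` and `format` are standard Python built-ins.

```python
def char_code(ch):
    """Return a character's code point and its 8-bit binary form."""
    code = ord(ch)
    return code, format(code, "08b")

lower_l = char_code("l")  # (108, "01101100")
upper_i = char_code("I")  # (73, "01001001")

# The two characters look alike on screen, but their codes are 35 apart.
gap = lower_l[0] - upper_i[0]
print(lower_l, upper_i, gap)
```

Nothing in these numbers tells the model that the two shapes are nearly identical; that information only exists in how the characters are drawn.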

In one of my favourite papers from the conference, Salesky and colleagues propose to render text as images (just like a black-and-white screen) and replace the character-level input of their model with a Convolutional Neural Network (CNN) for visual input. The remainder of their model remains the same as other contemporary NLP methods — only the character-input is now represented on a continuum instead of discretely.
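
As a toy illustration of why pixels help, the sketch below compares hand-drawn 5x3 bitmaps. The glyph shapes and the `pixel_distance` helper are made up for this post; this is neither the authors' renderer nor their CNN, just a way to see that similar-looking characters end up close together once they are drawn.

```python
# Hypothetical 5x3 bitmaps (1 = ink, 0 = background); not taken from a real font.
GLYPHS = {
    "l": ["010", "010", "010", "010", "010"],
    "I": ["111", "010", "010", "010", "111"],
    "o": ["000", "111", "101", "111", "000"],
}

def pixel_distance(a, b):
    """Count the pixels at which the bitmaps of two characters differ."""
    return sum(
        pixel_a != pixel_b
        for row_a, row_b in zip(GLYPHS[a], GLYPHS[b])
        for pixel_a, pixel_b in zip(row_a, row_b)
    )

print(pixel_distance("l", "I"))  # 4 of 15 pixels differ
print(pixel_distance("l", "o"))  # 9 of 15 pixels differ
```

In this toy font, "l" is closer to "I" than to "o", which matches human intuition in a way the raw character codes do not.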

They evaluate their model on various translation tasks and show that it maintains performance on standard tasks compared to standard input models, but outperforms them on noisy input. As a cherry on top, they further show how their method is able to translate ancient Latin texts converted to modern haxor "leetspeak". 1337!

The Paper

Salesky, E., Etter, D. and Post, M., 2021. Robust Open-Vocabulary Translation from Visual Text Representations. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online and Punta Cana, Dominican Republic.

The Word Level

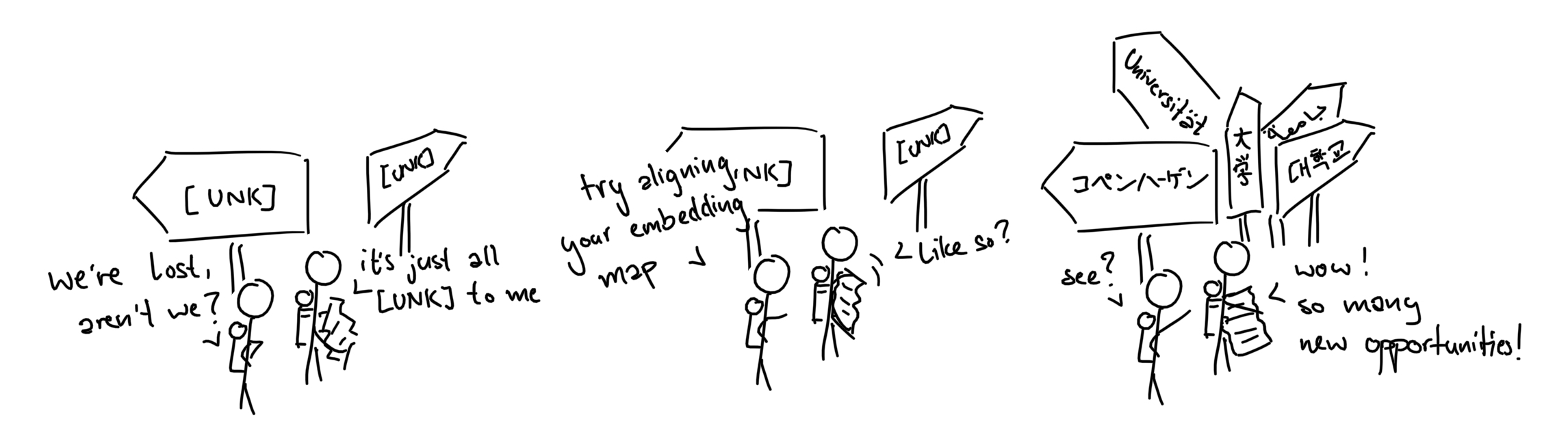

Now that we can read characters, our model needs to associate certain character sequences with certain meanings (this phenomenon is also known as "words"). We explicitly say "character sequences", since most NLP models today split words into their most frequent sub-words (e.g. "sub", "-", "words"). The list of sub-words a model knows is finite and as such it sometimes encounters an unknown character sequence for which it has no representation. These sequences are collapsed into a single "word" known as the UNK-token (i.e. unknown).
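
The sketch below shows how a word can end up as UNK. It assumes a tiny made-up vocabulary and a greedy longest-match segmentation; real tokenizers use learned vocabularies with tens of thousands of entries and differ in the details, so treat the names `VOCAB`, `tokenize` and `unk_rate` as illustrative only.

```python
VOCAB = {"sub", "-", "words", "un", "known"}  # hypothetical toy vocabulary
UNK = "[UNK]"

def tokenize(word, vocab=VOCAB):
    """Greedy longest-match segmentation; the word collapses to UNK if any part is uncovered."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate span until it matches a vocabulary entry.
        while end > start and word[start:end] not in vocab:
            end -= 1
        if end == start:  # nothing in the vocabulary matches at this position
            return [UNK]
        pieces.append(word[start:end])
        start = end
    return pieces

def unk_rate(words):
    """Fraction of words that the tokenizer maps to the UNK token."""
    return sum(tokenize(word) == [UNK] for word in words) / len(words)

print(tokenize("sub-words"))  # ['sub', '-', 'words']
print(tokenize("ሰላም"))        # ['[UNK]'] (a word in Ge'ez script, unseen by the toy vocabulary)
print(unk_rate(["sub-words", "ሰላም", "unknown", "xyz"]))  # 0.5
```

Every word that collapses to UNK looks identical to the model, no matter what it originally said.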

For high-resource languages such as English, German, French and even Chinese and Japanese, the percentage of UNKs encountered in practice is typically close to 0%. Since in-language data for the majority of languages is sparse, models trained on such high-resource languages are often directly applied to completely unrelated targets. Here, UNKs become problematic, especially if the target language has an unseen script.

As Pfeiffer and colleagues point out in "UNKs Everywhere", for languages such as Amharic or Khmer, the percentage of words unknown to the model can reach up to 86%, making most NLP infeasible. Imagine being told to translate a text with 86% of the words blacked out. We could replace some entries in the model's original vocabulary with words from the new language, but without a dictionary, this process would be arbitrary and leave the character sequences without any particular meaning, or even with the wrong one.

In this paper, the authors make use of the fact that some vocabulary items still overlap: even Amharic still has around 13% lexical overlap. These items are frequently parts of names, e.g. "university" or "international". By using these matches and the fact that a model's word representations can be decomposed into smaller bits of different linguistic information, they reassemble new word representations from the old. Essentially, they align the source and target vocabulary using the few words which overlap and then use specific subspaces of knowledge which the model has already learned to fill the remaining gaps. In a way, this is analogous to grasping onto some known words in a new language and beginning to figure out more words based on this overlap as you go along.
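
To give a rough feel for "anchoring on the overlap", here is a deliberately simplified sketch. It is not the authors' method: instead of decomposing the representations, it simply copies the vectors of overlapping tokens and starts every new token near the mean of those anchors. The function name, the noise level and the toy vectors are all assumptions of mine.

```python
import random

def init_target_embeddings(source_emb, target_vocab, noise=0.01, seed=0):
    """Copy vectors for overlapping tokens; start all other tokens near the overlap mean."""
    rng = random.Random(seed)
    overlap = [tok for tok in target_vocab if tok in source_emb]
    if not overlap:
        raise ValueError("no lexical overlap to anchor the new vocabulary")
    dim = len(source_emb[overlap[0]])
    mean = [sum(source_emb[tok][i] for tok in overlap) / len(overlap) for i in range(dim)]
    target_emb = {}
    for tok in target_vocab:
        if tok in source_emb:
            target_emb[tok] = list(source_emb[tok])  # reuse what the model already knows
        else:
            target_emb[tok] = [m + rng.gauss(0.0, noise) for m in mean]  # placeholder start
    return target_emb, len(overlap) / len(target_vocab)

# Toy 2-dimensional vectors; real models use hundreds of dimensions.
source = {"university": [1.0, 0.0], "international": [0.0, 1.0], "the": [0.5, 0.5]}
target_vocab = ["university", "international", "ሰላም", "ቤት"]
embeddings, overlap_ratio = init_target_embeddings(source, target_vocab)
print(overlap_ratio)  # 0.5
```

The new-script tokens still have to be learned from target-language text afterwards; the overlap only gives them a sensible place to start.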

The Paper

Pfeiffer, J., Vulić, I., Gurevych, I. and Ruder, S., 2021. UNKs Everywhere: Adapting Multilingual Language Models to New Scripts. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online and Punta Cana, Dominican Republic.

The Sentence Level

Words in hand, we can now start stringing them together into sentences. Most NLP tasks today happen at the sentence level with the assumption that most of the relevant linguistic information is contained within this sequence of words. A useful way to work with this information at a base level is through the syntactic dependencies between all words. For example, we can infer who did what to whom by connecting the subject and the object of the sentence to the central predicate. All of these connections together result in a graph which is a useful starting point for more complex processing. This task is fittingly called dependency parsing and its linguistic and computational properties are particularly helpful when large amounts of data are unavailable.
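
As a small sketch of what such a graph looks like in practice, the example below stores each word with the index of its head and a relation label, then reads off who did what to whom. The sentence and its analysis are written by hand for illustration (no parser is involved), the relation labels follow Universal Dependencies naming, and the helper `who_did_what` is my own.

```python
# Each token: (form, head index, relation). Indices are 1-based; head 0 marks the root.
SENTENCE = [
    ("The", 2, "det"),
    ("researcher", 3, "nsubj"),
    ("parsed", 0, "root"),
    ("the", 5, "det"),
    ("sentence", 3, "obj"),
]

def who_did_what(tokens):
    """Return (subject, predicate, object) by following edges attached to the root."""
    root = next(i for i, (_, head, _) in enumerate(tokens, start=1) if head == 0)

    def dependent(relation):
        for form, head, rel in tokens:
            if head == root and rel == relation:
                return form
        return None  # the sentence has no dependent with this relation

    return dependent("nsubj"), tokens[root - 1][0], dependent("obj")

print(who_did_what(SENTENCE))  # ('researcher', 'parsed', 'sentence')
```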

It can still be difficult to train dependency parsers for extremely low-resource languages and in such cases, transfer learning is often involved. In zero-shot transfer learning, the idea is to train a model on lots of out-of-language data and then pray that it also works on the target language despite never having seen it. The typical paradigm here is that the more data you train on, the better.

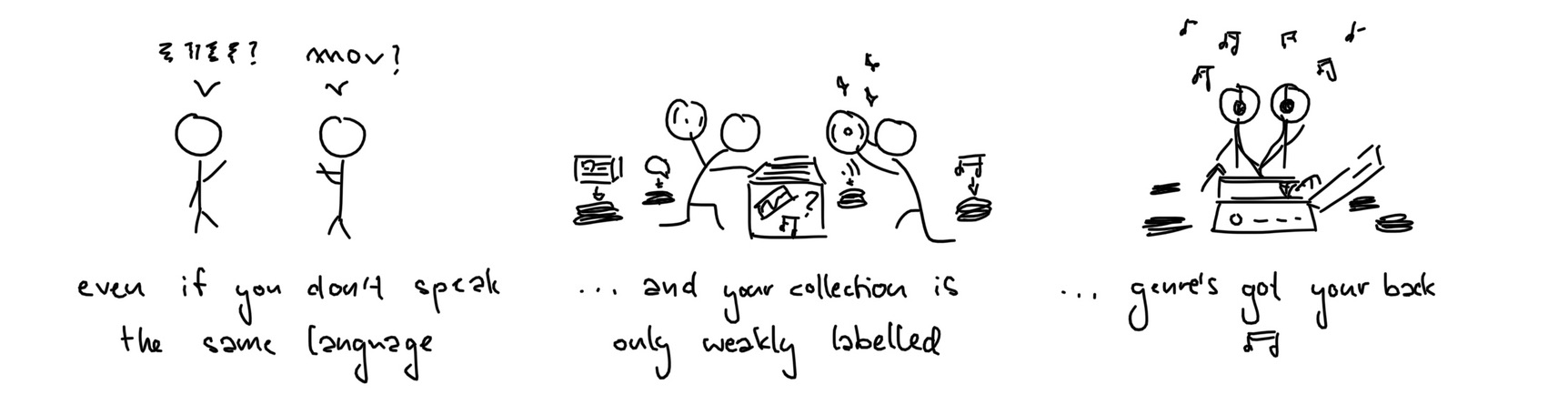

As we said in the beginning, language lives on a large spectrum of variation. Just because a model trained on German news data somewhat works on Dutch news data, it does not mean that we have "solved Dutch". In our EMNLP paper "Genre as Weak Supervision for Cross-lingual Dependency Parsing", we take a first careful look at how strong a role the variational dimension of genre plays across languages.

We train models for parsing low-resource targets such as transcribed spoken Chukchi and Swedish Sign Language as well as Hindi-English tweets or Turkish-German. From the 1.4 million sentences available to us, we would ideally like to pick the most relevant ones for the target. For spoken data, we might want to only train on other spoken data, since properties like filler words or the abrupt ending of sentences may be shared across languages.

The Universal Dependencies dataset, which we use, is amazing when it comes to language diversity (122 and counting!), but genre is only weakly defined. Essentially, we are given around 200 bags containing sentences from multiple genres, but each bag's tag only lists what genres should be in there, not which sentence belongs to which. Since we are still working on learning all 122 languages, we first need some NLP to sort sentences by genre across all languages. Previous approaches which sort all sentences from all languages at once did not work, because they are distracted by language similarity instead of focusing on more subtle genre similarities. For this many languages, we discovered that what works best is first sorting within each bag (subtler differences are more pronounced within bags than across), then finding shared representations to compare our sorted bags across languages, and finally choosing the sorted sentences from each bag which are closest to the target genre.
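
The final selection step can be sketched as follows. This is a simplified illustration under my own assumptions, not the code from our paper: the sentence vectors are made up, the within-bag sorting is assumed to have already produced the clusters, and comparing cluster centroids by cosine similarity is just one plausible way to measure closeness to the target.

```python
import math

def centroid(vectors):
    """Average a list of equal-length vectors."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]

def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def select_closest_clusters(bags, target_vectors):
    """For each bag, pick the cluster whose centroid is most similar to the target data."""
    target = centroid(target_vectors)
    return {
        bag_name: max(clusters, key=lambda label: cosine(centroid(clusters[label]), target))
        for bag_name, clusters in bags.items()
    }

# Hypothetical bags, each already sorted into clusters of sentence vectors.
bags = {
    "bag_de": {"cluster_0": [[0.9, 0.1], [0.8, 0.2]], "cluster_1": [[0.1, 0.9], [0.2, 0.8]]},
    "bag_nl": {"cluster_0": [[0.2, 0.7]], "cluster_1": [[0.7, 0.3]]},
}
target_vectors = [[0.1, 1.0], [0.3, 0.9]]  # a few sentences from the target

chosen = select_closest_clusters(bags, target_vectors)
print(chosen)  # {'bag_de': 'cluster_1', 'bag_nl': 'cluster_0'}
```

Only the sentences in the chosen clusters would then be used for training, which is where the savings over training on everything come from.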

This targeted approach for niche languages worked significantly better than just throwing all data at the problem — reducing the computational workload by up to 8 times while achieving better results. Genre is still just one facet of language variation, but hopefully the NLP community can chip away at more dimensions in order to increase the breadth of coverage for all kinds of natural language.

The Paper

Müller-Eberstein, M., van der Goot, R. and Plank, B., 2021. Genre as Weak Supervision for Cross-lingual Dependency Parsing. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online and Punta Cana, Dominican Republic.

The Dataset Level

While we saw that bucketloads of data are not everything, data is still the essential ingredient for all our models. In the previous case, we were very lucky that the maintainers of the Universal Dependencies dataset have collected contributions from many authors speaking a very diverse pool of languages. However, most data today is dominated by English or similar high-resource languages, meaning that any research on other languages must first clear the hurdle of finding or collecting data for the desired target.

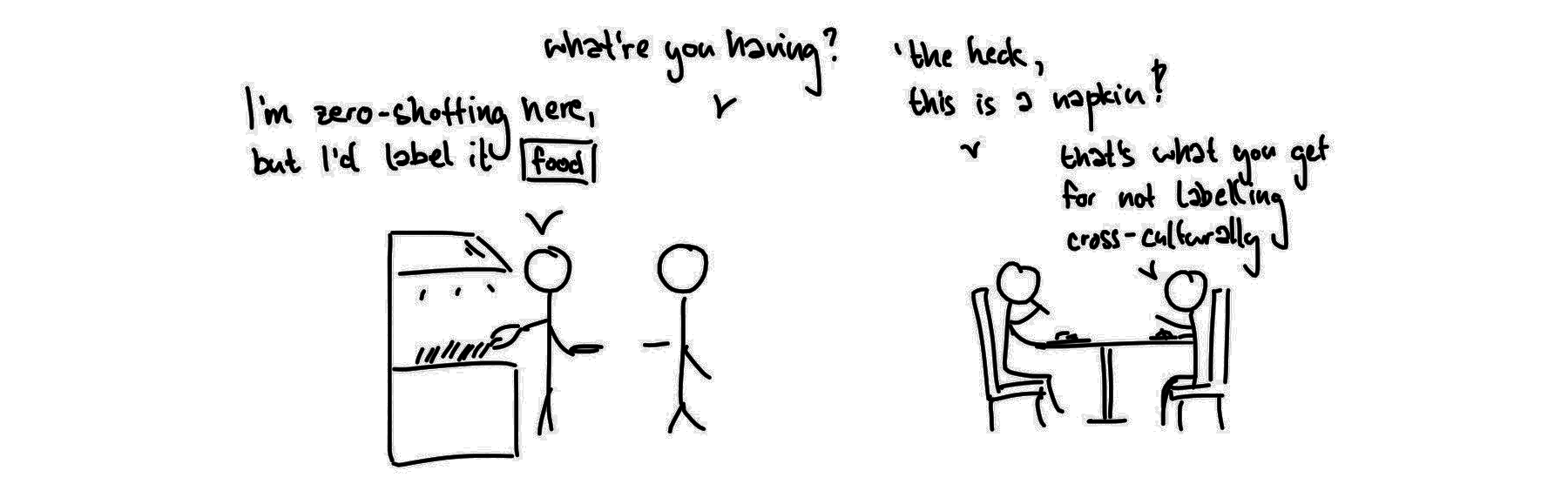

This sparsity increases with each dimension of language you include and explodes when you go cross-modal, looking for example at text and images together. Creating text-image datasets requires a lot of work and therefore typically employs crowd-workers to gather large amounts of annotations relatively quickly. A frequently overlooked issue in these settings is that these annotators are often anglo-centric (even if they are not from English-speaking regions). There have been issues with translations that are not representative of the target language, but are rather an unnatural blend with English — the dreaded "translationese".

The best long paper of EMNLP by Liu and colleagues highlighted this issue for text and image datasets specifically, showing that current annotation methods are insufficient to capture a wider range of cultural diversity. If I were told to write an image caption for a dish that I have never seen before, the best I could probably do is also something like: "I sure hope it's edible".

This is obviously lacking, and as such the authors introduce a new multi-cultural vision-and-language dataset named "Multicultural Reasoning over Vision and Language", or MaRVL for short. Not only do they share their dataset, but they also meticulously document their creation process such that future researchers can avoid pitfalls which impact the data's cultural diversity. Most interestingly, they do not pre-define a set of images to annotate, but rather have native speakers create an initial set of images of interest to them and their culture. Going even further back, the concepts for which related images are collected are also crowd-sourced from native speakers. This is to ensure that the MaRVL authors themselves bias the final selection of data as little as possible. Throughout the process, native speakers check each other's annotations to ensure the correctness of the dataset.

In the end, MaRVL contains images with culturally diverse concepts from typologically diverse languages, beginning with Indonesian, Mandarin Chinese, Swahili, Tamil and Turkish and with plenty of potential to be expanded to more.

The Paper

Liu, F., Bugliarello, E., Ponti, E. M., Reddy, S., Collier, N. and Elliott, D., 2021. Visually Grounded Reasoning across Languages and Cultures. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online and Punta Cana, Dominican Republic.

From Low-resource to Riches

Hopefully, these few samples from this year's EMNLP have left you with the takeaway that there is more to NLP than sotaing for the next best result on an English benchmark. Looking beyond the fringes, we see that the impressive large-scale models "solving language" today are really just processing a tiny subset of truly natural language.

This does not mean that we are lacking in progress towards natural language processing. If we look at all the variation that language has to offer, we find countless niches where current methods break, but which can simultaneously be leveraged to fill the gaps that we might not have even been aware of. I personally hope this trend of finding the hidden resources in these "low-resource" setups will pave the way towards natural language processing which ensures that everyone is understood.